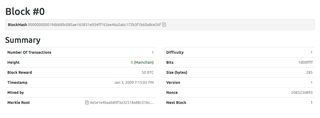

Oh my Satoshi

2008 ~

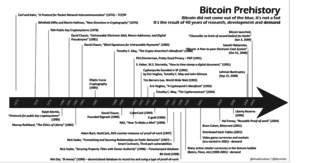

Nick Szabo:

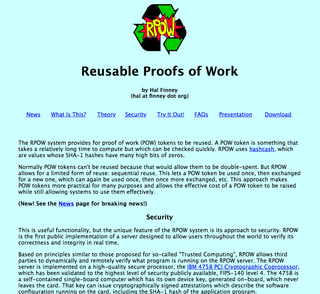

"Bitcoin is not a list of cryptographic features, it’s a very complex system of interacting mathematics and protocols in pursuit of what was a very unpopular goal. While the security technology is very far from trivial, the why

was by far the biggest stumbling block – nearly everybody who heard the general idea thought it was a very bad idea. Myself, Wei Dai, and Hal Finney were the only people I know of who liked the idea (or in Dai’s case his related idea) enough to pursue it to any significant extent until Nakamoto (assuming Nakamoto is not really Finney or Dai). Only Finney (RPOW) and Nakamoto were motivated enough to actually implement such a scheme."

Source:

https://www.gwern.net/Bitcoin-is-Worse-is-Better

Re: Bitcoin P2P e-cash paper

Mon, 17 Nov 2008

I believe I’ve worked through all those little details over the last year and a half while coding it, and there were a lot of them.

The functional details are not covered in the paper, but the sourcecode is coming soon.

I sent you the main files.

(available by request at the moment, full release soon)

Satoshi Nakamoto

Re: Bitcoin P2P e-cash paper

Satoshi Nakamoto

Fri, 14 Nov 2008 14:29:22 -0800

Hal Finney wrote:

> I think it is necessary that nodes keep a separate pending-transaction list associated with each candidate chain. ... One might also ask ... how many candidate chains must a given node keep track of at one time, on average?

Fortunately, it's only necessary to keep a pending-transaction pool for the current best branch. When a new block arrives for the best branch, ConnectBlock removes the block's transactions from the pending-tx pool. If a different branch becomes longer, it calls DisconnectBlock on the main branch down to the fork, returning the block transactions to the pending-tx pool, and calls ConnectBlock on the new branch, sopping back up any transactions that were in both branches. It's expected that reorgs like this would be rare and shallow.

With this optimisation, candidate branches are not really any burden. They just sit on the disk and don't require attention unless they ever become the main chain.

> Or as James raised earlier, if the network broadcast is reliable but depends on a potentially slow flooding algorithm, how does that impact performance?

Broadcasts will probably be almost completely reliable. TCP transmissions are rarely ever dropped these days, and the broadcast protocol has a retry mechanism to get the data from other nodes after a while. If broadcasts turn out to be slower in practice than expected, the target time between blocks may have to be increased to avoid wasting resources. We want blocks to usually propagate in much less time than it takes to generate them, otherwise nodes would spend too much time working on obsolete blocks.

I'm planning to run an automated test with computers randomly sending payments to each other and randomly dropping packets.

> 3. The bitcoin system turns out to be socially useful and valuable, so that node operators feel that they are making a beneficial contribution to the world by their efforts (similar to the various "@Home" compute projects where people volunteer their compute resources for good causes).
>
> In this case it seems to me that simple altruism can suffice to keep the network running properly.

It's very attractive to the libertarian viewpoint if we can explain it properly. I'm better with code than with words though.

Satoshi Nakamoto

The Cryptography Mailing List

Source:

mail-archive.com/cryptography@metzdowd.com
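The reorganisation procedure described in the reply above can be illustrated with a minimal Python sketch. Only the names ConnectBlock and DisconnectBlock come from the email. The `Block` and `Node` classes, the use of plain strings as transactions, and the list-based chain are simplifying assumptions made here for illustration; they are not taken from the Bitcoin source code.

```python
from dataclasses import dataclass, field


@dataclass
class Block:
    """Hypothetical block: an identifier plus the transactions it confirms."""
    name: str
    txs: list = field(default_factory=list)


class Node:
    """Toy node that keeps one pending-tx pool, for the best branch only."""

    def __init__(self):
        self.main_chain = []   # blocks on the current best branch
        self.pending = set()   # transactions not yet in the best branch

    def connect_block(self, block):
        # A connected block confirms its transactions, so they leave the pool.
        self.main_chain.append(block)
        self.pending.difference_update(block.txs)

    def disconnect_block(self):
        # A disconnected block's transactions become pending again.
        block = self.main_chain.pop()
        self.pending.update(block.txs)
        return block

    def reorganise(self, fork_height, new_branch):
        """Switch to new_branch, which forks off after fork_height blocks."""
        if len(new_branch) <= len(self.main_chain) - fork_height:
            return False       # the candidate branch is not longer
        while len(self.main_chain) > fork_height:
            self.disconnect_block()
        for block in new_branch:
            self.connect_block(block)
        return True


node = Node()
node.pending.update({"tx1", "tx2", "tx3"})
node.connect_block(Block("A", ["tx1"]))
node.connect_block(Block("B", ["tx2"]))   # main chain: A, B

# A longer branch forks after A; it also contains tx2, but not tx3.
switched = node.reorganise(1, [Block("B2", ["tx2"]), Block("C2", ["tx4"])])
```

In this toy run, tx2 returns to the pool when block B is disconnected and is removed again when B2 is connected, so only the transaction confirmed in neither branch stays pending. Candidate branches need no pool of their own, which is the point of the optimisation.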

Boltzmann Brains, consciousness and the arrow of time

published by Hal Finney 31/12/2008

Sometimes we consider here the nature of consciousness, whether observer moments need to be linked to one another, the role of causality in consciousness, etc. I thought of an interesting puzzle about Boltzmann Brains which offers a new twist to these questions. As most readers are aware, Boltzmann Brains relate to an idea of Boltzmann on how to explain the arrow of time. The laws of physics seem to be time symmetric, yet the universe is grossly asymmetric in time. Boltzmann proposed that if you had a universe in a maximum entropy state, say a uniform gas, then given enough time, the gas would undergo fluctuations to regions of lower entropy. Sometimes, purely at random, clumps of molecules would happen to form. Even more rarely, these clumps might be large and ordered. Given infinite time, one could even have an entire visible-universe worth of matter clump together in an ordered fashion, from which state it would then decay into higher entropy conditions. Life could evolve during this decay, observe the universe around it, and find itself in conditions much like our own.

The Boltzmann Brain is a counter-argument, suggesting that the universe and everything else is redundant; all you need is a brain to form via a spontaneous random fluctuation, and to hold together long enough to engage in a few moments of conscious thought. Such a Boltzmann Brain is far more likely to form than an entire universe, hence the vast majority of conscious thoughts in such a model will be in Boltzmann Brains and not in brains in large universes. If we were tempted to explain the arrow of time in this way, we must accept that the universe is an illusion and that we are actually Boltzmann Brains, a conclusion which most people don't like.

Now this scenario can be criticized in many ways, but I want to emphasize a couple of points which aren't always appreciated. The first is that the Boltzmann scenario, whether a whole universe or just a Brain is forming, is basically time symmetric. That means that if you saw a movie of a Boltzmann universe forming and then decaying back to random entropy, you would not be able to tell which way the movie was running, if it were to be reversed. (This is an unavoidable consequence of the time symmetry of the underlying physics.) It follows that while the universe is moving into the low-entropy state, it must be evolving backwards. That is, an observer from outside would see time appearing to run backwards. Eggs would un-scramble themselves, objects would fall upwards from the ground, ripples would converge on spots in lakes from which rocks would then leap from the water, and so on.

At some point this time reversal effect would stop, and the universe would then proceed to evolve back into a high entropy state, now with time going "forwards". Now, the forward phase will not in general be an exact mirror image of the reverse, because of slight random fluctuations and the like, but it will be an alternate path that essentially starts with the same initial conditions. So we will see one path backwards into the minimum-entropy state, and another path forwards from that state. Both paths are fully plausible histories and neither is distinguishable from the other as far as which was reversed and which was forward, if you ran a recording of the whole process backwards.

One might ask, what causes time to run backwards during the first half of the Boltzmann scenario? The answer is, nothing but very, very odd luck. Time is no more likely to continue to run backwards, or to run backwards the same everywhere in the local fluctuation-area, than it is to start running backwards right now in the universe around you. Nothing stops eggs from unscrambling themselves except the unlikelihood, and the same principle is at work during the Boltzmann time-reversal phase. It is merely that we select, out of the infinity of time, those rare occasions where time does in fact "happen to happen" like this, that allows us to discuss it.

I want to emphasize that this picture of how Boltzmann fluctuations would work is a consequence of the laws of thermodynamics, and time symmetry. Sometimes people imagine that the fluctuation into the Boltzmann low-entropy state is fundamentally different from the fluctuation out of it. They accept that the fluctuation out will be similar to our own existence, with complex events happening. But they imagine that the fluctuation into low entropy might be much simpler, molecules simply aggregating together into some convenient state from which the complex fluctuation out and back to chaos can begin. While this is not impossible and hence will happen occasionally among the infinity of fluctuations in the Boltzmann universe, it will be rare. It will be no more common for a "simple" fluctuation-in process to occur than for a simple fluctuation-out process. In our universe, knowing it will evolve to a chaotic heat death, we might imagine that molecules would just fly apart into chaos, but we know that is highly unlikely. Instead, by far the most likely path is a complex one, full of turbulence and reactions and similar activity. By time symmetry, exactly the same arguments apply during the fluctuation-in phase. The vast majority of Boltzmann fluctuations that achieve a particular degree of low entropy will do so via complex, turbulent paths which if viewed in reverse will appear to be perfectly plausible sequences of events for a universe which is decaying from order to disorder, like our own.

Following on to this, let us consider the nature of consciousness during these Boltzmann excursions. Again let us focus on larger scale ones than just Boltzmann Brains, although the same principles apply there. During the time reversal phase, if conscious entities are present, their brains are running backwards. They are talking backwards, walking backwards, doing everything in reverse. They remember things that are coming in the future, and forget everything as soon as it has happened.

The question is, is there any difference in consciousness during the reverse and forward phases? Consider that during the forward phase, we started with a low entropy state, and now the laws of physics are playing out just as they do in our own universe. Everything is happening for a reason, depending on what has happened before. Events cause memories to appear in brains by virtue of the same causal effects which give rise to our own memories. Hence I imagine that most would agree that brains during the forward phase are conscious.

However, during the reverse phase, things are quite different. Brains have memories of things that haven't happened yet. Again, one might ask how this can be. The reason is because we stop paying attention to fluctuations where this doesn't happen. We only focus on Boltzmann fluctuations which take the universe into a plausible and consistent low-entropy state, one from which things can evolve in a way that is similar to what we see. When a brain remembers something, if that doesn't happen, the fluctuation is inconsistent. We skip over that one and look for one that is consistent.

In the consistent fluctuations, brain memories turn out to be correct, purely by luck. Similarly, every internal function of the brain which we might attribute to macroscopic-type causality, like neuron A firing because neuron B fired, will happen instead by luck, with neuron A firing as though neuron B is going to fire, and then neuron B just happening to fire in precisely the anticipated way.

The point is that during the time-reversal phase, causality as we normally think of it is absent. Subjectively-past events do not cause subjectively-future ones; rather, subjectively-future events take place before subjectively-past events, and it is merely through luck that things happen in a consistent pattern. Again, if we hadn't gotten lucky so that things work out, we wouldn't have called this a Boltzmann fluctuation of the kind we are interested in (Boltzmann Brain or Boltzmann Universe). By paying selective attention to only those fluctuations where things work, we will only observe cases where luck, rather than causality, makes things happen.

But things do happen, in the same pattern they would if causality were active. So the question is, are brains conscious during this time? Do the thoughts that occur during the time reversal (which recall is not exactly the same as what happens during the forward-time phase) have the same level of subjective reality as thoughts which occur when time runs forward?

We can argue it either way. In favor of consciousness, the main argument is that time is fundamentally symmetric (we assume). Hence there is no fundamental or inherent difference between the forward and reverse phases. The only differences are relative, with the arrow of time pointing in opposite directions in the two phases. But within each phase, we see events which can both be equally well described as leading to consciousness, and therefore conscious experiences will occur in both phases.

On the other side, many people see a role for causality in the creation or manifestation of consciousness. And arguably, causality is different in the two phases. In the forward phase (the part where we are returning from a low-entropy excursion to the high-entropy static state), events follow one another for the usual reasons, and it is correct to attribute a role for causality just as we do in our own experience. But in the reverse phase, it is purely by luck that things happen in a consistent way, and only because we have an infinity of time to work with that we are able to find sequences of events that look consistent even though they arose by simple happenstance. There is no true causality in this phase, just a random sequence of events where we have selected a sequence that mimics causality. And to the extent that consciousness depends on causality, we should not say that brains during this reverse phase are conscious.

I lean towards the first interpretation, for the following reason. If consciousness really was able to somehow distinguish the forward from reverse phases in a Boltzmann fluctuation, it would be quite remarkable. Given that the fundamental laws of physics are time symmetric, nothing should be able to do that, to deduce a true "implicit" arrow of time that goes beyond the superficial arrow of time caused by entropy differences. The whole point of time symmetry, the very definition, is that there should be no such implicit arrow of time. This suggestion would seem to give consciousness a power that it should not have, allow it to do something that is impossible.

And if the first interpretation is correct, it seems to call into question the very nature of causality, and its possible role in consciousness. If we are forced to attribute consciousness to sequences of events which occur purely by luck, then causality can't play a significant role. This is the rather surprising conclusion which I reached from these musings on Boltzmann Brains.

Hal Finney

Source: https://groups.google.com/forum/#!topic/everything-list..

Announcement

Oct. 31, 2008

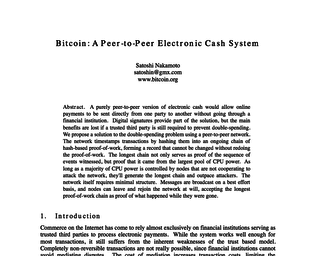

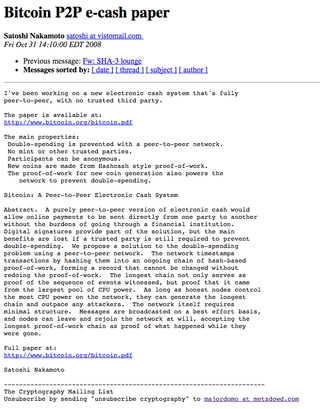

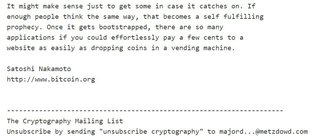

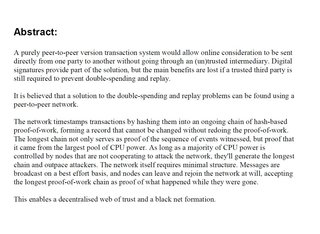

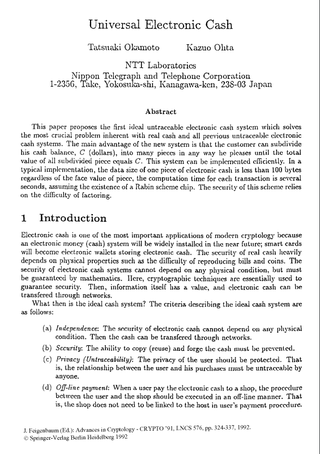

Someone using the name Satoshi Nakamoto makes an announcement on The Cryptography Mailing list at metzdowd.com: "I've been working on a new electronic cash system that's fully peer-to-peer, with no trusted third party. The paper is available at http://www.bitcoin.org/bitcoin.pdf"

This link leads to the now-famous white paper published on bitcoin.org entitled "Bitcoin: A Peer-to-Peer Electronic Cash System." This paper detailed methods of using a peer-to-peer network to generate what was described as "a system for electronic transactions without relying on trust". This paper would become the Magna Carta for how Bitcoin operates today.

Source

Bitcoin P2P e-cash paper

Satoshi Nakamoto satoshi at vistomail.com

Fri Oct 31 14:10:00 EDT 2008

I've been working on a new electronic cash system that's fully peer-to-peer, with no trusted third party.

The paper is available at:

http://www.bitcoin.org/bitcoin.pdf

The main properties:

- Double-spending is prevented with a peer-to-peer network.

- No mint or other trusted parties.

- Participants can be anonymous.

- New coins are made from Hashcash style proof-of-work.

- The proof-of-work for new coin generation also powers the network to prevent double-spending.

Bitcoin: A Peer-to-Peer Electronic Cash System

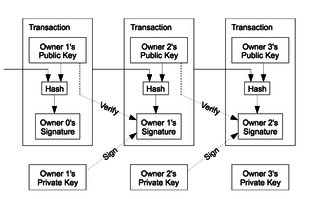

Abstract. A purely peer-to-peer version of electronic cash would allow online payments to be sent directly from one party to another without the burdens of going through a financial institution. Digital signatures provide part of the solution, but the main benefits are lost if a trusted party is still required to prevent double-spending. We propose a solution to the double-spending problem using a peer-to-peer network. The network timestamps transactions by hashing them into an ongoing chain of hash-based proof-of-work, forming a record that cannot be changed without redoing the proof-of-work. The longest chain not only serves as proof of the sequence of events witnessed, but proof that it came from the largest pool of CPU power. As long as honest nodes control the most CPU power on the network, they can generate the longest chain and outpace any attackers. The network itself requires minimal structure. Messages are broadcasted on a best effort basis, and nodes can leave and rejoin the network at will, accepting the longest proof-of-work chain as proof of what happened while they were gone.

Full paper at:

http://www.bitcoin.org/bitcoin.pdf

Satoshi Nakamoto

---------------------------------------------------------------------

The Cryptography Mailing List

http://www.metzdowd.com/pipermail/cryptography/2008-October/014810.html
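The "ongoing chain of hash-based proof-of-work" from the abstract above can be illustrated with a short Python sketch. It is a toy model under stated assumptions: the function names (`mine`, `build_chain`, `is_valid`), the string-based block layout, and the difficulty of 12 leading zero bits are all hypothetical choices made here for illustration, and a single SHA-256 hash is assumed for simplicity. None of it is taken from the announcement or from the released software.

```python
import hashlib

DIFFICULTY_BITS = 12  # assumed toy difficulty, about 4096 hashes per block on average
GENESIS_PREV = "0" * 64


def block_hash(prev_hash, data, nonce):
    payload = f"{prev_hash}|{data}|{nonce}".encode()
    return hashlib.sha256(payload).hexdigest()


def meets_target(digest):
    # Proof-of-work condition: the hash, read as an integer,
    # must start with DIFFICULTY_BITS zero bits.
    return int(digest, 16) >> (256 - DIFFICULTY_BITS) == 0


def mine(prev_hash, data):
    # Hashcash-style search: try nonces until the hash meets the target.
    nonce = 0
    while not meets_target(block_hash(prev_hash, data, nonce)):
        nonce += 1
    return {"prev": prev_hash, "data": data, "nonce": nonce,
            "hash": block_hash(prev_hash, data, nonce)}


def build_chain(records):
    chain, prev = [], GENESIS_PREV
    for data in records:
        block = mine(prev, data)
        chain.append(block)
        prev = block["hash"]
    return chain


def is_valid(chain):
    prev = GENESIS_PREV
    for block in chain:
        digest = block_hash(block["prev"], block["data"], block["nonce"])
        if (block["prev"] != prev or digest != block["hash"]
                or not meets_target(digest)):
            return False
        prev = digest
    return True


chain = build_chain(["alice pays bob 5", "bob pays carol 2", "carol pays dave 1"])
valid_before = is_valid(chain)

# Changing an early record breaks the chain until the work is redone
# for that block and every block after it.
chain[0]["data"] = "alice pays mallory 5"
valid_after = is_valid(chain)
```

Because each block commits to the hash of its predecessor, rewriting one record means re-mining that block and all later ones, which is why the abstract ties the longest chain to the largest pool of CPU power.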

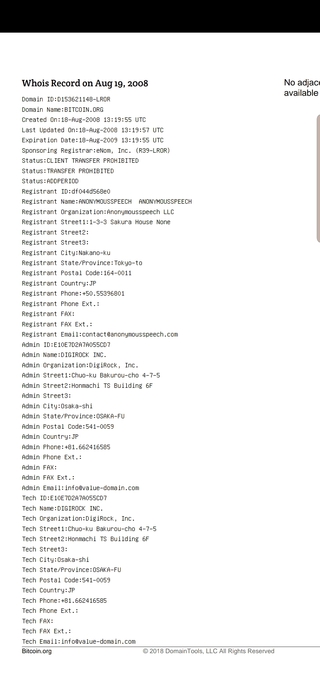

bitcoin.org

On 18 August 2008, the domain name bitcoin.org is registered. Today, at least, this domain is "WhoisGuard Protected," meaning the identity of the person who registered it is not public information.

Source

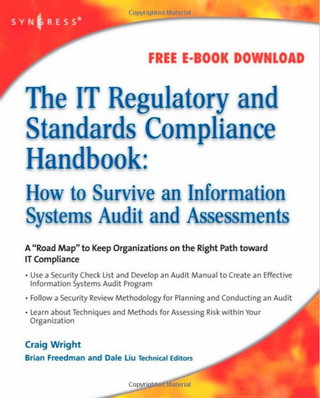

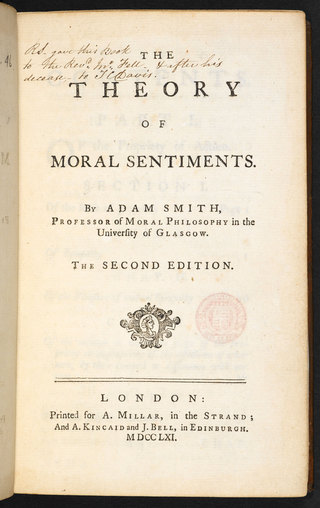

"An electronic signature, in the form of a digital signature, may satisfy the functional requirements of the law of contracts. It must be noted that the signature itself does not afford sufficient proof of the signatory’s identity. Further evidence is required which links the public key (or other method) used by the party. The adducing of additional extrinsic evidence such as is commonly employed when seeking to determine the identity associated with a signature on a manuscript may be used to provide proof"

Craig Wright, 2008

The IT Regulatory & Standards Compliance Handbook: How to Survive an Information Systems Audit & Assessments

Chapter 21, page: 620

Source

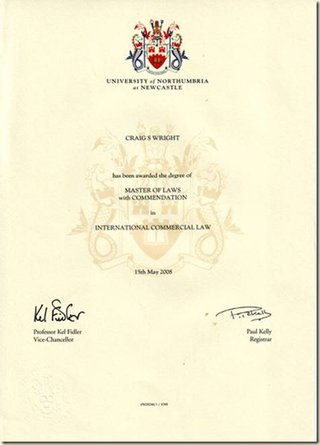

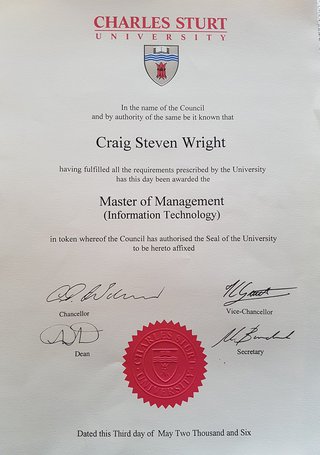

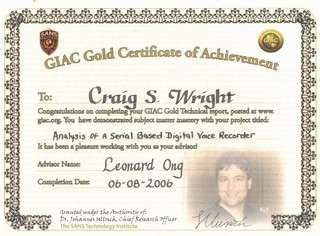

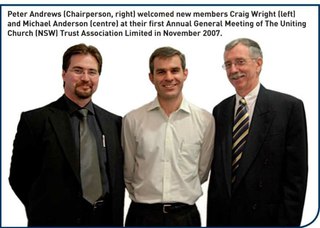

Craig Wright

Craig has personally conducted in excess of 1,200 IT security related engagements for more than 120 Australian and international organizations in the private and government sectors and now works for BDO Kendall's in Australia. These engagements have comprised of security systems design (including the design of critical network infrastructure), IT audit, systems implementation, staff training and mentoring, cross functional team development, policy and procedural development, business process analysis and digital forensics. In addition to his consulting engagements, Craig has authored numerous IT security related articles and is a co-author of "The Official CHFI Study Guide (Exam 312-49)".

Craig has been involved with designing the architecture for the world's first online casino (Lasseter's Online) in the Northern Territory, designed and managed the implementation of many of the systems that protect the Australian Stock Exchange and also developed and implemented the security policies and procedural practices within Mahindra and Mahindra, India's largest vehicle manufacturer. Craig holds (amongst others) the following industry certifications, CISSP (ISSAP & ISSMP), CISA, CISM, CCE, MCSE, GIAC GNSA, G7799, GWAS, GCFA, GLEG, GSEC, GREM, GPCI and GSPA and has completed numerous degrees in a variety of fields. He is currently completing both a Masters degree in Statistics (at Newcastle) and a Masters Degree in Law (LLM) specialising in International Commercial Law (E-commerce Law).

Craig is planning to start his second doctorate, a PhD in Economics and Law in the digital age in early 2008.

Source: http://www.sans.org/instructors/craig-wright

My Latest Plan

Posted by Craig Wright at Monday, August 25, 2008

Unlike most people, I have realised the value of time from when I was a youth. My latest addition of goals is to listen to the 90,000 most influential pieces of music throughout history (as judged by myself).

Tonight I have Hildegard Von Bingen playing. In this case Canticles Of Ecstasy. This consists of the following works:

- Vis Aeternitatis

- Nunc Aperuit Nobis

- Quia Ergo Femina Mortem Instruxit

- Cum Processit Factura Digiti Dei

- Alma Redemptoris Mater

- Ave Maria, O Auctrix Vite

- Spiritus Sanctus Vivificans Vite

- Ignis Spiritus Paracliti

- Caritas Habundat In Omnia

- Virga Mediatrix

- Viridissima Virga, Ave

- Instrumental Piece

- Pastor Animarum

- Tu Suavissima Virga

- Choruscans Stellarum

- Nobilissima Viriditas

This is a 20 year plan.

Hildegard of Bingen was born in 1084 and at 14 entered a Benedictine nunnery outside of Worms (the Rhineland). She became the Abbess in 1136 and subsequently moved her order to Rupertsberg - outside of Bingen.

She composed 77 vocal works (including 43 Antiphons) collectively known as the Symphonia armonie celestium revelationum.

This is a truly mystic collection of vocal works. A great reflective collection.

On top of this I also listened to Symphony No. 8 from Dimitri Shostakovich. This was the 1988 performance conducted by Yevgeny Mravinsky. This is reflective, bitterly powerful and emotionally transcendent. This is a dark and brooding work reflecting a true depth of emotion and experiences I cannot begin to comprehend.

Yet in it lies hope.

Tomorrow...

George Frideric Handel - Messiah (1742)

Source:

http://archive.is/MUfVz

CSW 09:43 Feb 6, 2020

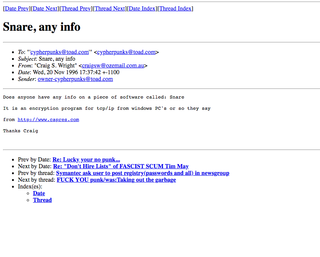

BITCOIN WAS NOT EVER LAUNCHED INTO THE CYPHERPUNK COMMUNITY!

There is a Cypherpunk mailing list - Bitcoin was NEVER announced there. I was on that list. - And I did not use it for Bitcoin

The list used was a common general list used originally by MANY in the field - even those in the NSA and DHS.

The Toad Cypherpunk list is not the Cryptography list http://ftp.arnes.si/packages/crypto-tools/cypherpunks/mailing_list/

These are separate.

Bitcoin is a new design for a fully peer-to-peer electronic cash system. A C++ implementation is under development for release as an open source project.

https://web.archive.org/web/20090131115053/http://bitcoin.org/

Note:

The C++ implementation is released as an Open Source Project - NOT the protocol!

It DOES matter where we are now...

As - YOU ARE USING MY IP - and I maintain those rights.

And, I believe and respect LAW

What is not relevant - Kraken and the drive to be outside the law and profit from Money laundering.

You want 1984 level Cherry picking - That thread https://twitter.com/danheld/status/...

Bitcoin was NOT created for the financial crisis. It was started well before that - AND that shit occurred right when I launched Bitcoin.

Again - BITCOIN had NOTHING to do with CypherPunks

-

Bob 13:57

As a software developer I always found it strange that Satoshi managed to deliver Bitcoin right on time for the financial crisis :smile: but I didn't turn much attention to this anomaly back then (so I believed the popular narrative).

Alice

I always figured it must have been a fairly long process to think out all the relevant parts of Bitcoin. Years. Not months or weeks. So I never swallowed that BS about the financial crisis being the trigger for Bitcoin. Historical anomaly. Circumstance. An idea whose time had come. (edited)

CSW

It was years

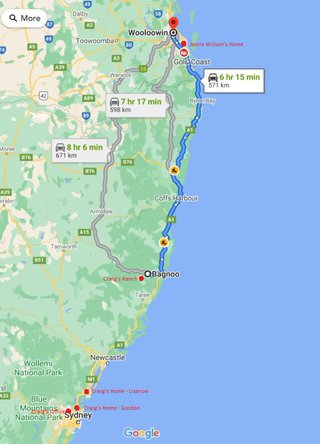

And the international banking crisis really was when the collapse of the investment bank Lehman Brothers occurred. This was on September 15, 2008.

I had already sent copies of the draft paper to multiple people by August 2008 and registered the domain etc well before Sept 2008.

I am NOT omniscient- I did not foresee Lehman Bro's

The system was close to ready in Nov 2008 and was known BEFORE the Sept 2008 collapse.

Hence - BitCoin had NOTHING at all to do with the GFC. It was not about banking. YES, I did not like what Chancellor Darling was saying...He wanted to reverse the reforms Thatcher introduced and nationalise the banks.

I LIKE banks - I just do not like the shit they have been doing - as a result of leftist BS about all people DESERVING a home loan.

Source:

Metanet.ico Slack on Feb 6, 2020

WEDNESDAY, 6 AUGUST 2008

What is NON-REPUDIATION

Non-repudiation is the process of ensuring that the parties to a transaction cannot deny (that is, repudiate) that the transaction occurred.

Repudiation is an assertion refuting a claim or the refusal to acknowledge an action or deed. Anticipatory repudiation (or anticipatory breach) describes a declaration by the promising party (as associated with a contract) that they intend to fail to meet their contractual obligations.

Posted by Craig Wright at Wednesday, August 06, 2008

Source:

http://archive.is/YmOdF#selection-4649.0-4717.1

An August 2008 post on Wright’s blog, months before the November 2008 introduction of the Bitcoin whitepaper on a cryptography mailing list. It mentions his intention to release a “cryptocurrency paper,” and references “triple entry accounting,” the title of a 2005 paper by financial cryptographer Ian Grigg that outlines several Bitcoin-like ideas.

A post on the same blog from November, 2008. It includes a request that readers who want to get in touch encrypt their messages to him using a PGP public key apparently linked to Satoshi Nakamoto. A PGP key is a unique string of characters that allows a user of that encryption software to receive encrypted messages. This one, when checked against the database of the MIT server where it was stored, is associated with the email address satoshin@vistomail.com, an email address very similar to the satoshi@vistomail.com address Nakamoto used to send the whitepaper introducing Bitcoin to a cryptography mailing list.

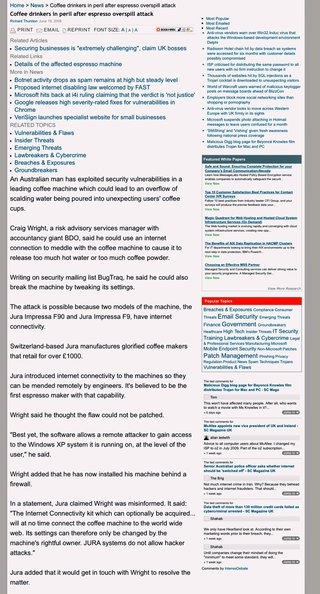

Hacking Coffee Makers.

Jun 17 2008 01:28AM

Craig Wright (Craig Wright bdo com au)

Hi All,

I have a Jura F90 Coffee maker with the Jura Internet Connection Kit. The idea is to:

"Enable the Jura Impressa F90 to communicate with the Internet, via a PC.

Download parameters to configure your espresso machine to your own personal taste.

If there's a problem, the engineers can run diagnostic tests and advise on the solution without your machine ever leaving the kitchen."

Guess what - it can not be patched as far as I can tell ;) It also has a few software vulnerabilities.

Fun things you can do with a Jura coffee maker:

1. Change the preset coffee settings (make weak or strong coffee)

2. Change the amount of water per cup (say 300ml for a short black) and make a puddle

3. Break it by engineering settings that are not compatible (and making it require a service)

The connectivity kit uses the connectivity of the PC it is running on to connect the coffee machine to the internet. This allows a remote coffee machine "engineer" to diagnose any problems and to remotely do a preliminary service.

Best yet, the software allows a remote attacker to gain access to the Windows XP system it is running on at the level of the user.

Compromise by Coffee.

Regards,

Craig Wright GSE-Compliance

Craig Wright

Manager, Risk Advisory Services

Direct : +61 2 9286 5497

Craig.Wright (at) bdo.com (dot) au [email concealed]

+61 417 683 914

BDO Kendalls (NSW-VIC) Pty. Ltd.

Level 19, 2 Market Street Sydney NSW 2000

GPO BOX 2551 Sydney NSW 2001

Fax +61 2 9993 9497

http://www.bdo.com.au/

Bitcoin Origins

by Phillip James Wilson aka Scronty

http://vu.hn/

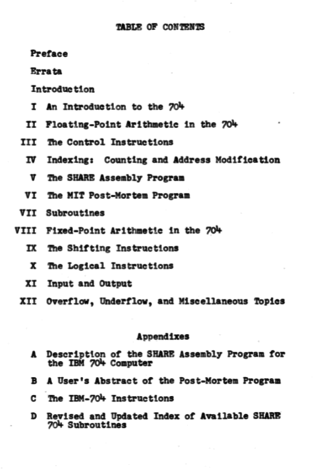


Bitcoin Origins

(self.Bitcoin) submitted April? 2017 * by Scronty

Afternoon, All. Today marks the eighth anniversary of the publication of the Bitcoin white paper. As a special tribute, I will provide you with a short story on the origins of the Bitcoin tech. I've been out of the game for many years, however now I find myself drawn back - in part due to the energy that's being added by the incumbents, in part due to information that's become public over the past year. I haven't followed the Bitcoin and alt coin tech for the past five or six years. I left about six months before (2). My last communication with (2) was five years ago which ended in my obliteration of all development emails and long-term exile. Every mention of Bitcoin made me turn the page, change the channel, click away - due to a painful knot of fear in my belly at the very mention of the tech. As my old memories come back I'm jotting them down so that a roughly decent book on the original Bitcoin development may be created. The following are a few of these notes. This is still in early draft form so expect the layout and flow to be cleaned up over time. Also be aware that the initial release of the Bitcoin white paper and code was what we had cut down to from earlier ideas.

Source:

https://www.reddit.com/r/Bitcoin ...

Tominaga Nakamoto

From Wikipedia, the free encyclopedia

Tominaga Nakamoto (富永 仲基 Tominaga Nakamoto, 1715–1746) was a Japanese philosopher. He was educated at the Kaitokudō academy founded by members of the mercantile class of Osaka, but was ostracised shortly after the age of 15. Tominaga belonged to a Japanese rationalist school of thought and advocated a Japanese variation of atheism, mukishinron (no gods or demons). He was also a merchant in Osaka. Only a few of his works survive; his Setsuhei ("Discussions on Error") has been lost and may have been the reason for his separation from the Kaitokudō, and around nine other works' titles are known.[2] The surviving works are his Okina no Fumi ("The Writings of an Old Man"),[3] Shutsujō Kōgo ("Words after Enlightenment"; on textual criticism of Buddhist sutras), and three other works on ancient musical scales, ancient measurements, and poetry.

He took a deep critical stance against normative systems of thought, partially based on the Kaitokudō's emphasis on objectivity, but was clearly heterodox in eschewing the dominant philosophies of the institution. He was critical of Buddhism, Confucianism and Shintoism.[4] Whereas each of these traditions drew on history as a source of authority, Tominaga saw appeals to history as a pseudo-justification for innovations that try to outdo other sects vying for power. For example, he cited the various Confucian Masters who saw human nature as partially good, neither good nor bad, all good, and inherently bad; analysing later interpreters who tried to incorporate and reconcile all Masters. He criticised Shintoism as obscurantist, especially in its habit of secret instruction. As he always said, "hiding is the beginning of lying and stealing".[5][6] In his study of Buddhist scriptures, he asserted that Hinayana school of scriptures preceded Mahayana scriptures but also asserted that the vast majority of Hinayana scriptures are also composed much later than the life of Gautama Buddha, the position which was later supported by modern scriptural studies.

"Even though (2) and (3) weren't as high in the crypto world and as knowledgable as the folks I wanted to interact with, they had factors which placed them far above any of these others.

They were driven.

Especially (2)."

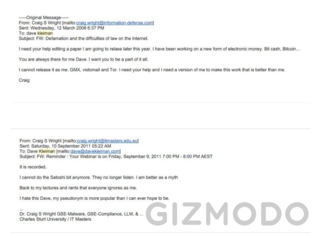

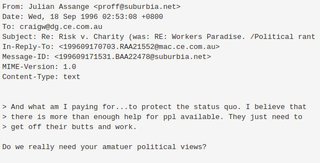

March 12th 2008

I need your help editing a paper I am going to release later this year. I have been working on a new form of electronic money. Bit cash, Bitcoin...

You are always there for me Dave. I want you to be part of it all.

I cannot release it as me. GMX, Vistomail and Tor. I need your help and I need a version of me to make this work that is better than me.

Craig

Source:

https://www.reddit.com/r/Bitcoin/co...

Source: https://www.gwern.net/docs/bitcoin/2008-nakamoto

W. Dai, "b-money"

From: "Satoshi Nakamoto" <satoshi@anonymousspeech.com>

Sent: Friday, August 22, 2008 4:38 PM

To: "Wei Dai" <weidai@ibiblio.org>

Cc: "Satoshi Nakamoto" <satoshi@anonymousspeech.com>

Subject: Citation of your b-money page

I was very interested to read your b-money page. I'm getting ready to release a paper that expands on your ideas into a complete working system. Adam Back (hashcash.org) noticed the similarities and pointed me to your site.

I need to find out the year of publication of your b-money page for the citation in my paper. It'll look like:

[1] W. Dai, "b-money," http://www.weidai.com/bmoney.txt, (2006?).

You can download a pre-release draft at

http://www.upload.ae/file/6157/ecash-pdf.html Feel free to forward it to anyone else you think would be interested.

Title: Electronic Cash Without a Trusted Third Party

Abstract: A purely peer-to-peer version of electronic cash would allow

online payments to be sent directly from one party to another without the

burdens of going through a financial institution. Digital signatures

offer part of the solution, but the main benefits are lost if a trusted

party is still required to prevent double-spending. We propose a solution

to the double-spending problem using a peer-to-peer network. The network

timestamps transactions by hashing them into an ongoing chain of

hash-based proof-of-work, forming a record that cannot be changed without

redoing the proof-of-work. The longest chain not only serves as proof of

the sequence of events witnessed, but proof that it came from the largest

pool of CPU power. As long as honest nodes control the most CPU power on

the network, they can generate the longest chain and outpace any

attackers. The network itself requires minimal structure. Messages are

broadcasted on a best effort basis, and nodes can leave and rejoin the

network at will, accepting the longest proof-of-work chain as proof of

what happened while they were gone.

Satoshi
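The mechanism the abstract describes, hashing records into an ongoing chain of proof-of-work so that nothing can be changed without redoing the work, can be illustrated with a short sketch. This is not Bitcoin's actual block format or difficulty rule: the `mine`, `build_chain` and `valid_chain` helpers, the string encoding, and the "leading hex zeros" target are all assumptions made for illustration.

```python
import hashlib

DIFFICULTY = 3          # assumed target: hash must start with this many hex zeros
GENESIS_PREV = "0" * 64  # assumed placeholder for "no previous block"


def block_hash(prev_hash: str, data: str, nonce: int) -> str:
    """SHA-256 over the previous block's hash, the payload, and a nonce."""
    return hashlib.sha256(f"{prev_hash}|{data}|{nonce}".encode()).hexdigest()


def mine(prev_hash: str, data: str) -> dict:
    """Proof-of-work: try nonces until the hash meets the difficulty target."""
    nonce = 0
    while True:
        digest = block_hash(prev_hash, data, nonce)
        if digest.startswith("0" * DIFFICULTY):
            return {"prev": prev_hash, "data": data, "nonce": nonce, "hash": digest}
        nonce += 1


def build_chain(payloads: list) -> list:
    """Mine one block per payload, each committing to the block before it."""
    chain, prev = [], GENESIS_PREV
    for payload in payloads:
        block = mine(prev, payload)
        chain.append(block)
        prev = block["hash"]
    return chain


def valid_chain(chain: list) -> bool:
    """Check every link and every proof-of-work in order."""
    prev = GENESIS_PREV
    for block in chain:
        if block["prev"] != prev:
            return False
        digest = block_hash(block["prev"], block["data"], block["nonce"])
        if digest != block["hash"] or not digest.startswith("0" * DIFFICULTY):
            return False
        prev = digest
    return True
```

Changing the payload of an early block invalidates its stored hash, and re-mining that block changes the hash that every later block committed to, so all subsequent work would have to be redone. That is the property the abstract relies on when it says the record "cannot be changed without redoing the proof-of-work".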

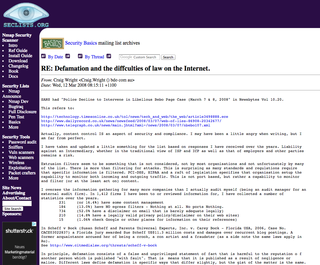

Source: http://seclists.org/basics/2008/Mar/147

RE: Defamation and the diffculties of law on the Internet.

From: "dave kleiman" <dave () davekleiman com>

Date: Wed, 12 Mar 2008 03:25:13 -0400

Hats off to you Craig,

Sometimes you amaze me....I literally just took on a case today

dealing exactly with this, you are making my life easy as I am gathering

(with your permission) this information you have provided for my client's

review.

When this becomes public record, I will post-up the results.

I will take any more information on this subject with great enthusiasm and

appreciation, as always!!!

By the way, for those of you who have never asked for Craig's help, you do

not know what you are missing.

I have asked his research assistance more than once. One particular time it

was dealing with abilities of cookies on the server side, when I awoke the

next morning, I had 100's of pages and links of information on that subject,

and variations and ideas I had not even considered or had forgotten to consider (e.g. web

bugs). Why did he help? For no other reason than he just likes to

research information, and possibly considers me a friend from afar. He

probably had as much fun reading up on the subject as I did.

And along with all the technical details he included this:

Cookie Recipe Ingredients:

125 grams butter

50 grams caster sugar

60 grams brown sugar

1 large egg

1 teaspoon vanilla essence/extract

125 grams of plain flour

1/2 teaspoon salt

1/2 teaspoon bicarbonate of soda

250 grams of chocolate (Dark is best for this)

1/2 cup coarsely chopped almonds

Method: Turn your oven on to preheat at 180 degrees Celsius (about 350

degrees Fahrenheit, gas mark 4). Remember to take your grill pan out first.

Now get some baking trays ready (if they're not non-stick then you better

line them or grease them)......................Hey Presto! The world's BEST

cookies, in the comfort of your own home.

In the midst of this data exchange I casually mentioned that one day, when I

was in the position to not have to work so much, I would return to school

and my dream degrees in Cosmology and Astrophysics. Of course, the next day

I had links to every online study available for those degrees, with a "why

wait wink."

Further, it amazes me how Craig has a Blog helping to understand the rights

of US based Digital Forensic Examiners:

http://gse-compliance.blogspot.com/2008/01/texas-pi-fud.html

And he is based in AU. He simply cares enough about the cause and the

industry to help, it has no direct effect on him if US DFEs are required to

have PI licenses!!

People of the past considered "Loons":

(Feynman, Hawking, Sagan, da Vinci, Einstein, Columbus, everyone associated

with Monty Python and the Holy Grail:

Black Knight: Right, I'll do you for that!

King Arthur: You'll what?

Black Knight: Come here!

King Arthur: What are you gonna do, bleed on me?

Black Knight: I'm invincible!

King Arthur: ...You're a loony.

.......you get the picture)

Yep Craig is a Junkie; a Knowledge Junkie!!!!

For those of you who have nothing good to say; why say anything?

Dave

Respectfully,

Dave Kleiman - http://www.davekleiman.com

4371 Northlake Blvd #314

Palm Beach Gardens, FL 33410

561.310.8801

-----Original Message-----

From: listbounce () securityfocus com

[mailto:listbounce () securityfocus com] On Behalf Of Craig Wright

Sent: Tuesday, March 11, 2008 17:15

To: 'Simphiwe Mngadi'; security-basics () securityfocus com

Subject: RE: Defamation and the diffculties of law on the Internet.

SANS had "Police Decline to Intervene in Libellous Bebo Page Case

(March 7 & 8, 2008)" in Newsbytes Vol 10.20.

This refers to:

http://technology.timesonline.co.uk/tol/news/tech_and_web/the_web/article3498888.ece

http://www.dailyrecord.co.uk/news/newsfeed/2008/03/07/web-of-lies-86908-20342677/

http://www.telegraph.co.uk/news/main.jhtml?xml=/news/2008/03/07/nbebo107.xml

Actually, content control IS an aspect of security and compliance. I

may have been a little angry when writing, but I am far from

perfect.

I have taken and updated a little something for the list based on

responses I have received over the years. Liability against an

Intermediary, whether in the traditional view of ISP and ICP as well

as that of employers and other parties remains a risk.

Extrusion filters seem to be something that is not considered, not

by most organisations and not unfortunately by many of the list.

There is more than filtering for attacks. This is surprising as many

standards and regulations require that specific information is

filtered. PCI-DSS, HIPAA and a raft of legislation specify that

organisations set up the capability to monitor both incoming and

outgoing traffic. This is not port based, but rather a capability to

monitor and filter (or at the least act on) content.

I oversee the information gathering for many more companies than I

actually audit myself (being an audit manager for an external audit

firm). In 1,412 firms I have been to or reviewed information for, I

have collected a number of statistics over the years.

231 (or 16.4%) have some content management

184 (13.0%) have NO egress filters - Nothing at all. No ports. Nothing.

734 (52.0%) have a disclaimer on email that is barely adequate legally

210 (14.8%) have a legally valid privacy policy/disclaimer on their web sites

15 (1.06%) check Google or other places for information on their references

In Scheff v Bock (Susan Scheff and Parents Universal Experts, Inc.

v. Carey Bock - Florida USA, 2006, Case No. CACE03022837) a Florida

jury awarded Sue Scheff US$11.3 million costs and damages over

recurrent blog postings. A former acquaintance accused her of being

a crook, a con artist and a fraudster (as a side note the same laws

apply in Au).

See http://www.citmedialaw.org/threats/scheff-v-bock

In principle, defamation consists of a false and unprivileged

statement of fact that is harmful to the reputation of another

person which is published "with fault". That means that it is

published as a result of negligence or malice. Different laws define

defamation in specific ways that differ slightly, but the gist of

the matter is the same. Libel is a written defamation; slander is a

verbal defamation.

Some examples:

Libellous (when false):

Charging someone with being a communist (in 1959)

Calling an attorney a "crook"

Describing a woman as a call girl

Accusing a minister of unethical conduct

Accusing a father of violating the confidence of son

Not-libellous:

Calling a political foe a "thief" and "liar" in chance

encounter (because hyperbole in context)

Calling a TV show participant a "local loser," "chicken

butt" and "big skank"

Calling someone a "bitch" or a "son of a bitch"

Changing product code name from "Carl Sagan" to "Butt Head

Astronomer"

See http://w2.eff.org/bloggers/lg/faq-defamation.php for details.

So let us do the Math. Let us take a case of 0.1% (or 1 in a

thousand) employees (and the number is in reality higher than this)

posting from their place of work a defamatory post. 83.6% of

companies (based on figures above) will not detect or stop anything.

Less check at all.

Let us take an average US litigation cost for defamation of $182,500

(taking cases won from 96 to current in Au, UK and US) Also see

"Rethinking Defamation" by DAVID A. ANDERSON of the University of

Texas at Austin - School of Law.

(http://papers.ssrn.com/sol3/papers.cfm?abstract_id=976116#PaperDownload).

So if we take a decent sized company of 5,000 employees, we have an

expectation of 4 incidents per annum that in coming years would be

expected to make it to court. Employers are vicariously liable for

many of these actions. In the past, employers and ICP's have not

been targeted, but this is changing. The person doing the act is

generally not one with the funds to pay out the losses. The employer

is. Thus the ability to co-join employers will increase these types

of actions.

Facebook, blogs and other accesses will only make this worse in

coming years.

So what does this mean? Well in the case of our hypothetical

employer, there is an expected annualised loss of $788,400 US in

coming years. The maximum expected payout would be $50,000,000 US.

It is unlikely that the individual making the claim will be able to

pay the cost of losing, so the employer will more and more be added

to the suit.
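The arithmetic behind this estimate can be retraced from the figures quoted in the message. The sketch below is an assumption about how the numbers combine (employees × posting rate × share of firms with no content management × average litigation cost); the message itself does not state its formula, and the function names are illustrative. With these inputs the product is about US$762,850 a year, so the quoted US$788,400 corresponds to roughly 4.32 incidents rather than the 4.18 these inputs give.

```python
# Figures quoted in the message above.
FIRMS_REVIEWED = 1412
FIRMS_WITH_CONTENT_MGMT = 231
EMPLOYEES = 5000
POSTING_RATE = 0.001            # 0.1% of employees per year
AVG_LITIGATION_COST = 182_500   # US$
QUOTED_ANNUAL_LOSS = 788_400    # US$, as stated in the message


def share_without_controls(reviewed: int, with_controls: int) -> float:
    """Fraction of firms that would not detect or stop a defamatory post."""
    return 1 - with_controls / reviewed


def expected_incidents(employees: int, rate: float, undetected_share: float) -> float:
    """Assumed model: incidents scale linearly with head count, rate and exposure."""
    return employees * rate * undetected_share


def expected_annual_loss(incidents: float, avg_cost: float) -> float:
    """Expected yearly loss as incidents times average cost per case."""
    return incidents * avg_cost


undetected = round(share_without_controls(FIRMS_REVIEWED, FIRMS_WITH_CONTENT_MGMT), 3)
incidents = expected_incidents(EMPLOYEES, POSTING_RATE, undetected)
loss = expected_annual_loss(incidents, AVG_LITIGATION_COST)
implied_incidents = QUOTED_ANNUAL_LOSS / AVG_LITIGATION_COST

print(f"undetected share:  {undetected:.1%}")
print(f"incidents / year:  {incidents:.2f}")
print(f"expected loss:     ${loss:,.0f}")
print(f"incidents implied by quoted loss: {implied_incidents:.2f}")
```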

Now, I am in no way affiliated with ANY content management software,

but I see this as a necessary evil. This would count as an effective

corporate governance strategy, lowering the potential liability of

the employer.

In my experience, the costs of the software and the management are

going to add up to less than the potential. With the recent win in

Scheff v Bock, this is only going to increase.

Civil Liability

The conduct of both agents and employees can result in situations

where liability is imposed vicariously on an organisation through

both the common law[i] and by statute.[ii] The benchmark used to

test for vicarious liability for an employee requires that the deed

of the employee must have been committed during the course and

capacity of their employment under the doctrine respondeat superior.

Principals' liability will transpire when a `principal-agent'

relationship exists. Dal Pont[iii] recognises three possible

categories of agents:

(a) those that can create legal relations on behalf of a principal

with a third party;

(b) those that can affect legal relations on behalf of a principal

with a third party; and

(c) a person who has authority to act on behalf of a principal.

Despite the fact that a party is in an agency relationship, the

principal is liable directly as principal as opposed to

vicariously, "this distinction has been treated as of little

practical significance by the case law, being evident from judges'

reference to principals as vicariously liable for their agents'

acts"[iv]. The consequence being that an agency arrangement will

leave the principal directly liable rather than liable vicariously.

The requirement for employees of "within the scope of employment" is

a broad term without a definitive definition in the law, but whose

principles have been set through case law and include:

where an employer authorises an act but it is performed using an

inappropriate or unauthorised approach, the employer shall remain

liable[v];

the fact that an employee is not permitted to execute an action is

not applicable or a defence[vi]; and the mere reality that a deed is

illegal does not exclude it from the scope of employment[vii].

Unauthorised access violations or computer fraud by an employee or

agent would be deemed remote from the employee's scope of employment

or the agent's duty. This alone does not respectively absolve the

employer or agent from the effects of vicarious liability[viii].

Similarly, it remains unnecessary to respond to a claim against an

employer through asserting that the wrong committed by the employee

was for their own benefit. This matter was authoritatively settled

in Lloyd v Grace, Smith and Co.[ix], in which a solicitor was

held liable for the fraud of his clerk, albeit the fraud was

exclusively for the clerk's individual advantage. It was declared

that "the loss occasioned by the fault of a third person in such

circumstances ought to fall upon the one of the two parties who

clothed that third person as agent with the authority by which he

was enabled to commit the fraud"[x]. Lloyd v Grace, Smith and

Co.[xi] was also referred to by Dixon J in the leading Australian

High Court case, Deatons Pty Ltd v Flew[xii]. The case concerned an

assault by the appellant's barmaid who hurled a beer glass at a

patron. Dixon J stated that a servant's deliberate unlawful act may

invite liability for their master in situations where "they are acts

to which the ostensible performance of his master's work gives

occasion or which are committed under cover of the authority the

servant is held out as possessing or of the position in which he is

placed as a representative of his master"[xiii].

Through this authority, it is generally accepted that if an employee

commits fraud or misuses a computer system to conduct an illicit

action that results in damage being caused to a third party, the

employer may be supposed liable for their conduct. In the case of

the principal's agent, the principal is deemed to be directly liable.

In the context of the Internet, the scope in which a party may be

liable is wide indeed. A staff member or even a consultant (as an

agent) who publishes prohibited or proscribed material on websites

and blogs, changes systems or even data and attacks the site of

another party and many other actions could leave an organisation

liable. Stevenson Jordan Harrison v McDonnell Evans (1952)[xiv]

provides an example of this type of action. This case hinged on

whether the defendant (the employer) was able to be held liable

under the principles of vicarious liability for the publication of

assorted "trade secrets" by one of its employees which was an

infringement of copyright. The employee did not work solely for the

employer. Consequently, the question arose as to sufficiency of the

"master-servant" affiliation between the parties for the conditions

of vicarious liability to be met. The issue in the conventional

"control test" as to whether the employee was engaged under a

"contract for services", against a "contract of service" was

substituted in these circumstances with a test of whether the

tort-feasor was executing functions that were an "integral part of the

business" or "merely ancillary to the business". In the former

circumstances, vicarious liability would extend to the employer.

Similarly, a contract worker acting as web master for an

organisation who loads trade protected material onto their own blog

without authority is likely to leave the organisation they work for

liable for their actions.

In Meridian Global Funds Management Asia Limited v Securities

Commission[xv], a pair of employees of MGFMA acted without the

knowledge of the company directors but within the extent of their

authority and purchased shares with company funds. The issue lay on

the qualification of whether the company knew, or should have known

that it had purchased the shares. The Privy Council held that

whether by virtue of the employees' tangible or professed authority

as an agent performing within their authority[xvi] or alternatively

as employees performing in the course of their employment[xvii],

both the actions, oversight and knowledge of the employees may well

be ascribed to the company. Consequently, this can introduce the

possibility of liability as joint tort-feasors in the instance where

directors have, on their own behalf, also accepted a level of

responsibility[xviii] meaning that if a director or officer is

explicitly authorised to issue particular classes of representations

for their company, and deceptively issues a representation of that

class to another resulting in a loss, the company will be liable

even if the particular representation was done in an inappropriate

manner to achieve what was in effect authorised.

The degree of authority is an issue of fact and relies appreciably

on more than the fact of employment providing the occasion for the

employee to accomplish the fraud. Panorama Developments (Guildford)

Limited v Fidelis Furnishing Fabrics Limited[xix] involved a company

secretary deceitfully hiring vehicles for personal use without the

managing director's knowledge. As the company secretary will

customarily authorise contracts for the company and would seem to

have the perceptible authority to hire a vehicle, the company was

held to be liable for the employee's actions.

Criminal Liability

Employers can be held to be either directly or vicariously liable

for the criminal behaviour of their employees.

Direct liability for organisations or companies refers to the class

of liability that occurs when it permits the employee's action. Lord

Reid in Tesco Supermarkets Limited v Nattrass[xx] formulated that

this transpires when someone is "not acting as a servant,

representative, agent or delegate" of the company, but as "an

embodiment of the company"[xxi]. When a company is involved in an

action, this principle usually relates to the conduct of directors

and company officers when those individuals are acting for or "as

the company". Being that directors can assign their

responsibilities, direct liability may encompass those employees who

act under that delegated authority. The employer may be directly

liable for the crime in cases where it may be demonstrated that a

direct act or oversight of the company caused or accepted the

employee's perpetration of the crime.

Where the prosecution of the crime involves substantiation of mens

rea[xxii], the company cannot be found to be vicariously liable for

the act of an employee. The company may still be found vicariously

liable for an offence committed by an employee if the offence does

not need mens rea[xxiii] for its prosecution, or where either

express or implied vicarious liability is produced as a consequence

of statute. Strict liability offences are such actions. In strict

liability offences and those that are established through statute to

apply to companies, the conduct or mental state of an employee is

ascribed to the company while it remains that the employee is

performing within their authority.

The readiness on the part of courts to attribute criminal liability

to a company for the actions of its employees seems to be

escalating. This is demonstrated by the Privy Council decision of

Meridian Global Funds Management Asia Ltd v Securities

Commission[xxiv] mentioned above. This type of fraudulent activity

is only expected to become simpler through the implementation of new

technologies by companies. Further, the attribution of criminal

liability to an organisation in this manner may broaden to include

those actions of employees concerning the abuse of new technologies.

It is worth noting that both the Data Protection Act 1998[xxv] and

the Telecommunications (Lawful Business Practice)(Interception of

Communications) Regulations 2000[xxvi] make it illegal to use

equipment connected to a telecommunications network for the

commission of an offence. The Protection of Children Act 1978[xxvii]

and Criminal Justice Act 1988[xxviii] make it a criminal offence to

distribute or possess scanned, digital or computer-generated

facsimile photographs of a child under 16 that are indecent.

Further, the Obscene Publications Act 1959[xxix] subjects all

computer material making it a criminal offence to publish an article

whose effect, taken as a whole, would tend to deprave and corrupt

those likely to read, see or hear it. While these Acts do not of

themselves create liability, they increase the penalties that a

company can be exposed to if liable for the acts of an employee

committing offences using the Internet.

[i] Broom v Morgan [1953] 1 QB 597.

[ii] Employees Liability Act 1991 (NSW).

[iii] G E Dal Pont, Law of Agency (Butterworths, 2001) [1.2].

[iv] Ibid [22.4].

[v] Singapore Broadcasting Association, SBA's Approach to the

Internet, See Century Insurance Co Limited v Northern Ireland Road

Transport Board [1942] 1 All ER 491; and Tiger Nominees Pty Limited

v State Pollution Control Commission (1992) 25 NSWLR 715, at 721 per

Gleeson CJ.

[vi] Tiger Nominees Pty Limited v State Pollution Control Commission

(1992) 25 NSWLR 715.

[vii] Bugge v Brown (1919) 26 CLR 110, at 117 per Isaacs J.

[viii] unreported decision in Warne and Others v Genex Corporation

Pty Ltd and Others -- BC9603040 -- 4 July 1996.

[ix] [1912] AC 716

[x] [1912] AC 716, Lord Shaw of Dunfermline at 739

[xi] [1912] AC 716

[xii] (1949) 79 CLR 370 at 381

[xiii] Ibid.

[xiv] [1952] 1 TLR 101 (CA).

[xv] [1995] 2 AC 500

[xvi] see Lloyd v Grace, Smith & Co. [1912] AC 716

[xvii] see Armagas Limited v Mundogas S.A. [1986] 1 AC 717

[xviii] Demott, Deborah A. (2003) "When is a Principal Charged with an Agent's Knowledge?" 13 Duke Journal of Comparative & International Law. 291

[xix] [1971] 2 QB 711

[xx] [1972] AC 153

[xxi] ibid, at 170 per Lord Reid

[xxii] See Pearks, Gunston & Tee Limited v Ward [1902] 2 KB 1, at 11 per Channell J, and Mousell Bros Limited v London and North-Western Railway Company [1917] 2 KB 836, at 843 per Viscount Reading CJ.

[xxiii] See Mousell Bros Limited v London and North-Western Railway Company [1917] 2 KB 836, at 845 per Atkin J.

[xxiv] [1995] 2 AC 500.

[xxv] Data Protection Act 1998 [UK]

[xxvi] Telecommunications (Lawful Business Practice)(Interception of Communications) Regulations 2000 [UK]

[xxvii] Protection of Children Act 1978 [UK]

[xxviii] Protection of Children Act 1978 and Criminal Justice Act 1988 [UK]

[xxix] Obscene Publications Act 1959 [UK]

Regards,

Craig Wright (GSE-Compliance)

Craig Wright

Manager of Information Systems

Direct : +61 2 9286 5497

Craig.Wright () bdo com au

+61 417 683 914

BDO Kendalls (NSW)

Level 19, 2 Market Street Sydney NSW 2000

GPO BOX 2551 Sydney NSW 2001

Fax +61 2 9993 9497

http://www.bdo.com.au/

</pre>

Enforcing Law on the Internet.

Posted by Craig Wright on 26 February 2008

The Internet remains the wild, wild, web not because of a lack of laws, but rather the difficulty surrounding enforcement. The Internet’s role is growing on a daily basis and has reached a point where it has become ubiquitous and an essential feature of daily life both from a personal perspective and due to its role in the international economy. The recently released “Creative Britain; new talents for the new economy”[1] framework paper has demonstrated a reversal of many of the positions formerly held by the British government. This new position is likely to require internet service providers to take action on illegal file sharing, as a consequence leaving intermediaries liable if they fail to take action.

If an ISP is to be held liable for authorisation as an intermediary, it must have knowledge, or otherwise deduce that infringements are proceeding.[2] Although intermediaries commonly monitor their systems and have the means to suspect when infringements are occurring, Internet intermediaries also require the authority to prevent infringement if they are to be held liable for authorisation, a condition that entails an aspect of control.[3] The government’s proposal[4], which requires monitoring by the destination ISP, places the responsibility firmly on the local provider of Internet services. Though this may seem unfair to many, as source ISPs may be located in any location in the world and can easily move when facing restrictions, holding the destination ISP responsible for monitoring content would appear to be the only feasible solution, as it is infeasible for the destination ISP to provide services within the UK from other locations.

It is clear that a framework similar to that proposed by Mann and Belzley[5] or by Lichtman & Posner[6] is needed to effectively control infringements over the Internet and that such a solution is economically the most effective solution. The proposed strategy of the British government[7] is unlikely to be popular at first. Recommendations for a French style system of three strikes[8] would require additional monitoring from the ISP and also introduce a possibility of infringing the customer’s privacy rights[9]. The concurrence of privacy legislation and the need for additional controls will make the introduction of these initiatives interesting to say the least. The pirates are starting to replace the cowboys, changing the wild, wild, web to that of the proverbial high seas. The need for sensible legislation that will limit the increasing criminal activity while also considering the impacts on the law-abiding users of the internet is clear. The proposed strategy of the British government[10] offers great potential, but whether it succeeds will come down to the implementation. The Internet is entering its final stage of development: legislative control.

Anonymity and leaky international boundaries impede the prosecution of the primary malfeasors. Internet intermediaries, especially those that service end users are both easily identifiable and have many of their assets within the UK. The malfeasors require payment intermediaries to process their transactions. The “Creative Britain” strategy[11] has provided little in either incentive or regulation concerning these actors. Payment intermediaries have the technological competence to avert detrimental transactions at the lowest cost of any intermediary with the largest potential payback. Further, in many cases the largest effect on the Internet pirates is provided through economic means. As such, the legislation should be adapted to mandate internet intermediaries control illicit transactions and consequently protect the public interest. To do this effectively will require more than just a mandate that Internet intermediaries monitor illicit activity. It will be also necessary to regulate liability in order to protect Internet intermediaries from the actions that they are required to take in order to protect the Internet. The constant seesawing between policy positions that has occurred in respect of the Internet demonstrates that we have not achieved this yet.

The position of the British Government[12] with its recent moves to call Intermediaries to action in the formation of a voluntary body to stop Intellectual Property violations is a start to the reforms that are needed. The problem is well defined in this call for reform; however, the call for voluntary changes is unlikely to bring about the required changes. Intermediaries have the capability to stop many of the transgressions on the Internet now, but the previous lack of a clear direction and potential liability associated with action rather than inaction[13] remains insufficient to modify their behaviour. Even in the face of tortious liability, the economic impact of inaction is unlikely to lead to change without a clear framework and the parallel legislation that will provide a defence for intermediaries who act to protect their clients and society.

[1] Department for Culture, Media and Sport, 22 Feb 2008

[2] Ibid, Gibbs J at 12-13; cf Jacobs J at 21-2. See also Microsoft Corporation v Marks (1995) 33 IPR 15.

[3] Ibid, University of New South Wales v Moorhouse, supra, per Gibbs J at 12; WEA International Inc v Hanimex Corp Limited (1987) 10 IPR 349 at 362; Australasian Performing Right Association v Jain (1990) 18 IPR 663. See also Lim YF, 199-201; S Loughnan, See also BF Fitzgerald, “Internet Service Provider Liability” in Fitzgerald, A., Fitzgerald, B., Cook, P. & Cifuentes, C. (Eds.), Going Digital: Legal Issues for Electronic Commerce, Multimedia and the Internet, Prospect (1998) 153.

[4] The “Creative Britain; new talents for the new economy” strategy was issued on the 22nd Feb 2008 and is available online.

[5] Mann, R. & Belzley, S (2005) “The Promise of the Internet Intermediary Liability” 47 William and Mary Law Review 1 at 27 July 2007]

[6] Lichtman & Posner (2004) “Holding Internet Service Providers Accountable”

[7] Supra Note 4.

[8] One of the current recommendations is based on the three-strikes policy begun in France late last year. Under it, Internet users caught distributing copyrighted files, violating digital rights management, or committing similar infringements would first receive an e-mailed warning from their ISP. On a second offence, file-sharers would face a temporary account suspension. On a third offence, they would be entirely cut off from the Internet. (See also http://arstechnica.com/news.ars).

[9] The UK Privacy & Electronic Communications (EC Directive) Regulations 2003 and Directive 2002/58/EC (the E-Privacy Directive) may create problems. The juxtaposition of privacy versus control creates a fine line that is easily crossed.

[10] The “Creative Britain; new talents for the new economy” strategy was issued on the 22nd Feb 2008 and is available online.

[11] Supra Note 4.

[12] Supra Note 4.

[13] The most obvious example of this action can be found in the history of the Communications Decency Act. Congress directly responded to the ISP liability found in Stratton Oakmont, Inc. v. Prodigy Services, 23 Media L. Rep. (BNA) 1794 (N.Y. Sup. Ct. 1995), 1995 WL 323710, by including immunity for ISPs in the CDA, 47 U.S.C. § 230(c)(1) (2004) (exempting ISPs for liability as the “publisher or speaker of any information provided by another information content provider”), which was pending at the time of the case. Similarly, Title II of the Digital Millennium Copyright Act, codified at 17 U.S.C. § 512, settled tension over ISP liability for copyright infringement committed by their subscribers that had been created by the opposite approaches to the issue by courts. Compare Playboy Enters., Inc. v. Frena, 839 F. Supp. 1552, 1556 (M.D. Fla. 1993) (finding liability), with Religious Tech. Ctr. v. Netcom, Inc., 907 F. Supp. 1361, 1372 (N.D. Cal. 1995) (refusing to find liability). The fear of being seen as a publisher rather than a mere conduit has driven many ISPs and ICPs to a state of inaction.

Source: gse-compliance.blogspot.com

Craig Wright, Security Hero

April 4th, 2008

By Stephen Northcutt

Craig Wright certainly qualifies as a security hero! He has written articles and books on security and has nearly every SANS and GIAC certificate available (including platinum). He is a GIAC Technical Director, and jack-of-all-trades, master of a few, and all of us at the security laboratory thank him for his time!

Craig, I see that you are qualified in a number of disciplines including having just completed a master’s degree in law. So why did you choose information security?

When I was young, information security didn’t really exist as a career. I started doing some simple programming tasks and moved into a role as a SunOS 4.1 administrator. We had a custom developed database on the system and, at that point, security was generally the least of anyone's concerns. I had been tasked with ensuring that the data on the system remained secure and that the system was available, but there was no budget for security. Back in the days before the Web, Gopher proved a great tool for finding information. What I started learning about back then was just how many vulnerabilities exist.

I got into a little bit of trouble from time to time when I would demonstrate some of the vulnerabilities. This led to a reputation as the guy who could "break into stuff" - something that was both good and bad. When systems needed to be configured I would be consulted, but I also found that I would be blamed when anything went wrong.

So, of course, when firewalls came about in the mid-90s, I was the one that they were handed to. I stayed in security as it is something that I do well and it allows me to give back to the community.

So, how did you learn about firewalls back then?

Back then it was even more of the "wild wild web" than now. I cringe at some of the things we did. I started by putting together bits and pieces that I'd dug up and basically cobbled together a halfway decent firewall using the Firewall Toolkit. Back then code was available, and a lot simpler, so much of the learning process was really playing. This continued when I started working for an ISP. I was basically given a copy of Checkpoint FireWall-1 version 2 and expected to know it by the end of the week.

This wasn't as bad as it seemed since, having worked with the Firewall Toolkit and Gauntlet, I found Checkpoint to be easy.

What about "security cowboys" in the 90s? Back then it seemed that the security methodology was to download some security tool that compiled on a Sun 3, how were those times for you?

In the 90s most of us were, basically, cowboys. Back then, methodologies didn't exist; and if you wanted some level of functionality, you had to make it yourself. Mistakes were a common occurrence, but what really mattered was if you learned from those mistakes. The biggest change for me was taking a role in the Australian Stock Exchange where I managed the firewalls and other security devices. Working in an environment with a six 9's requirement for uptime was a real eye-opener.

More than anything else, the ASX taught me the benefits of a well planned project. I also learnt VMS. The ASX beat the cowboy out of me.

How do you build your skills?

Practice, practice, practice. And, add to that a lot of reading.

Also, since I have to commute, I have used text to audio conversion software and changed papers to MP3 files, so I listen to these as I drive. This takes care of the theory, leaving time to practice the various tools and techniques at home. Add to that a huge amount of training from SANS and others, and an inability to get out of Uni, and that about covers it.

These days, it has become even simpler. I act as an editor for a technical publisher and also author my own papers and books. Getting paid to conduct technical reviews is a great way to stay on top of things.

I noticed that you have an eclectic collection of qualifications. Has this helped your security career, or is it just out of general interest?

I have found that knowledge in a wide range of topics makes it easier to understand the viewpoint other people are coming from. Having studied finance and law has made my role as an auditor easier. I’m sure that many of my clients do not see it this way since I have a habit of pointing out obscure points of law they may not be complying with, but my role as an auditor is to point out risk to management.

I stay sane as I’ve learnt that it is not my problem how they act on what I’ve pointed out to them. As long as I can ensure that they have an accurate understanding of the risk they face, I’ve done my job.

Statistics and data mining skills have helped this. I get told all the time that there are not enough sources of data to be able to create adequate quantitative risk models. This is where I find that a mathematical foundation would help many in the industry. Methods such as longitudinal data analysis provide the means to scientifically model an organisation's risk. The difficulty is that these methodologies do not lend themselves to simple tools and require an analysis focused on the particular organisation.

Do you see security as an art form or science?

In practice it should be progressing towards more of a science than an art. However, very few people treat it this way. Unfortunately, marketing and hype obscure much of what is really important. Many of the simple practices that make a site secure don’t lead to an opportunity to sell services. As such, many of these are ignored.

On top of this, many people have the idea that the only way to test a system is by using a black box format in an attempt to simulate (falsely) what a “hacker” would do. I mean, I am happy to take an organisation's money and spend a day or two doing basic preliminary investigations that any script kiddie can do if they require it of me, but I'm much happier just getting the information from them and saving both of us time and them money. I see far too much hype around the skills related to attacking and breaking into a system and not nearly enough effort put into securing systems. After all, it takes far more skill to properly secure a system than it does to break into one.

So what do you see as the major problem with auditing and compliance?

I have to say the major problem is that people attempt to tick a box rather than fix a problem. Often, more effort is put into avoiding fixing a vulnerability or other issue than would be taken to correct it.

Another problem is that the industry is really geared away from fixing the problems. We seem to do our best to avoid confronting clients with the risk that they actually face. Many people say that compliance regimes such as SOX do little to secure a system. The truth is that this has nothing to do with the compliance regime itself and everything to do with the general avoidance of it. As a case in point, we have been engaged to re-perform tests for SOX clients that are unrelated to the security of the system. On advising the client that the controls they have implemented will not make them compliant with the requirements of SOX, we have been instructed to simply rerun the test of the controls in place.

So, it is not to say that SOX does not lead to a secure system, but rather people do their best to avoid it. In other cases, I have seen companies create their own stored procedures on a database to obscure data fields so that they can pass a PCI audit. The auditor is never given enough time to test all the systems, so hiding what is actually occurring is an easy way to become “compliant”. The silly thing is that, in many instances, the amount of effort to hide non-compliance is far greater than what would be required to make the system compliant.

So why do organizations try to avoid securing systems in your view? It certainly seems like there are two basic keys to information assurance, configuring systems correctly and detecting when the configuration fails. Yet, proper configuration does not seem to get much emphasis.

There seems to be a lack of knowledge and understanding about security that has not disappeared over the years and, if anything, has gotten worse. As security professionals, we have to take a lot of the blame. Many of us spend our time bickering over obscure issues and things that don’t really matter. We really need to step back and take a risk-based approach. Some training in economics and finance would be a great benefit to many people in the industry.

I certainly hear you about the bickering over obscure issues; I love Schneier's point in Beyond Fear: we tend to love Security Theater. What benefit do you see that studying economics and finance would offer the average security professional?

We might be able to start having a risk-based approach. At the moment, too many of the issues in security come down to personal preferences. We really need to stand back and look at the true cost. Rather than installing that nice new toy with its six-figure price tag, maybe a little bit of time looking through and testing a few configuration standards (such as those from SANS and CISecurity.org) would benefit.

So, where do you see yourself in the future?

Ideally, I want to move into a technical research role. In my ideal position I would be either CTO and security evangelist or lab director. At the moment, I conduct research in my own time. The ideal would be having someone pay me for doing what is essentially my hobby.

Source:

https://web.archive.org/web/201005...

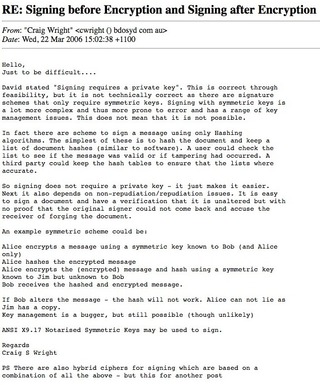

WEDNESDAY, 30 JANUARY 2008

Trusting electronically signed documents.

Both electronic and paper documents are subject to tampering. The discovery of hash collisions has demonstrated that the process of signing a hash is not without its own vulnerabilities. In fact, a collision allows two versions of a document to be created with the same hash and thus the same electronic signature.

It was stated in a response to an earlier post that “Electronic contracts do not have to be re-read when they are returned because there's generally no mechanism (unless it's built into the electronic process) to alter the contract terms, scratch out a line, insert text, etc. What you send is what is being signed.”

Unfortunately this is not true.

An attacker could generate two documents. One states:

Sell at $500,000.00 (Order 1)

The second document states:

Sell at $1,000,000.00 (Order 2)

Our attacker wants to have the second document as the one that is signed. By doing this they have increased the sale contract by $500,000.

Confoo is a tool that has been used to produce two web pages that look different but have the same MD5 hash (other hash algorithms have issues as well).

Digital signatures typically work using public key crypto. The document is signed using the private key of the signer. The public key is used for verification of the signature. The issue is that public key crypto is slow. So rather than signing the entire document, a hash of the document is signed. As long as the hash is trusted, the document is trusted. The concern is that collisions exist.
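As a rough illustration of the hash-then-sign idea, here is a minimal Python sketch. It is not how any particular signing product works: the key is a toy textbook RSA key built from two small known primes, there is no padding, and the names `sign`, `verify` and `digest_as_int` are made up for this example. The point is only that the private-key operation is applied to the digest, never to the document itself.

```python
import hashlib

# Toy textbook RSA key built from two known Mersenne primes.
# Assumption for illustration only: far too small and unpadded for real use.
P = 2**61 - 1
Q = 2**89 - 1
N = P * Q
E = 65537
D = pow(E, -1, (P - 1) * (Q - 1))  # private exponent (needs Python 3.8+)


def digest_as_int(document: bytes, algorithm: str = "md5") -> int:
    """Hash the document and read the digest as an integer below N."""
    digest = hashlib.new(algorithm, document).digest()
    return int.from_bytes(digest, "big") % N


def sign(document: bytes) -> int:
    """Apply the private key to the digest, not to the whole document."""
    return pow(digest_as_int(document), D, N)


def verify(document: bytes, signature: int) -> bool:
    """Recover the signed digest with the public key and compare."""
    return pow(signature, E, N) == digest_as_int(document)


order_1 = b"Sell at $500,000.00 (Order 1)"
order_2 = b"Sell at $1,000,000.00 (Order 2)"
signature = sign(order_1)
print(verify(order_1, signature))  # the signed order verifies
print(verify(order_2, signature))  # a different digest does not
```

Because `verify` only ever looks at the digest, any other document that produces the same digest would pass the same check.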

So back to the issue. Our attacker takes order 1 and order 2 and uses the Confoo techniques (also have a look at Stripwire).

The client is sent a document that reads as “order 1” and they agree to buy a product for $500,000. As such they sign the order using an MD5 hash that is encrypted with the buyer's private key. Our attacker (using Confoo style techniques) has set up a document with a collision. Order 1 and Order 2 both have the same hash.

Our attacker can substitute the orders and the signed document (that is a verified hash) will still verify as being signed.
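The substitution can be sketched in Python under one loud assumption: instead of a real MD5 collision (which needs the far cleverer Confoo/Stripwire style techniques), the sketch uses a deliberately crippled hash, the first two bytes of MD5, so that a collision can be found by brute force in well under a second. The names `weak_hash` and `find_colliding_orders`, and the HTML-comment filler standing in for content the reader never sees, are hypothetical.

```python
import hashlib
from itertools import count


def weak_hash(document: bytes) -> bytes:
    """First 2 bytes of MD5: a deliberately weak stand-in for a broken hash."""
    return hashlib.md5(document).digest()[:2]


def find_colliding_orders(order1: str, order2: str, pool: int = 2000):
    """Vary hidden filler in both orders until their weak hashes match."""
    seen = {}
    # Pre-compute many cosmetically identical variants of order 1.
    for i in range(pool):
        variant = f"{order1}\n<!-- {i} -->".encode()
        seen.setdefault(weak_hash(variant), variant)
    # Try variants of order 2 until one lands on a digest already seen.
    for j in count():
        variant = f"{order2}\n<!-- {j} -->".encode()
        match = seen.get(weak_hash(variant))
        if match is not None:
            return match, variant


signed_order, swapped_order = find_colliding_orders(
    "Sell at $500,000.00 (Order 1)",
    "Sell at $1,000,000.00 (Order 2)",
)
# Same digest, different terms: a signature over one digest covers both.
print(weak_hash(signed_order) == weak_hash(swapped_order))
```

The buyer reads and signs `signed_order`; since the signature covers only the digest, it verifies equally well against `swapped_order`.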

The ability of Microsoft Word to run macros and code makes it a relatively simple attack to create a collision in this manner.

So, electronic documents do need to be re-read, but this is simpler than with paper because there are tools to verify them. Ensure that the hash used is trusted, and consider using multiple hashes together.
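One way to read "multiple hashes together" is to record more than one digest of the document at signing time and to accept a returned copy only if every digest still matches. The sketch below assumes MD5 plus SHA-256 purely as an example pairing; the function names are made up for illustration, and this is not a substitute for simply signing with a hash that is still trusted.

```python
import hashlib


def fingerprints(document: bytes, algorithms=("md5", "sha256")) -> dict:
    """Compute one hex digest per algorithm for the same document."""
    return {name: hashlib.new(name, document).hexdigest() for name in algorithms}


def same_document(recorded: dict, received: bytes) -> bool:
    """Accept the received copy only if every recorded digest matches."""
    return fingerprints(received, tuple(recorded)) == recorded


recorded = fingerprints(b"Sell at $500,000.00 (Order 1)")
print(same_document(recorded, b"Sell at $500,000.00 (Order 1)"))
print(same_document(recorded, b"Sell at $1,000,000.00 (Order 2)"))
```

An attacker would then need a pair of documents that collide under every algorithm in the set at once, not just under MD5.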

Further Reading:

http://www.doxpara.com/slides/Black%20Ops%20of%20TCP2005_Japan.ppt

http://www.blackhat.com/presentations/bh-jp-05/bh-jp-05-kaminsky/bh-jp-05-kaminsky.pdf

Tech aside.